NO.181 Programming Language Support for Emerging Memory Technologies

May 7 - 10, 2024 (Check-in: May 6, 2024)

Organizers

- Peter Braam, University of Oxford, UK

- Jeremy Gibbons, University of Oxford, UK

- Oleg Kiselyov, Tohoku University, Japan

Overview

In addition to the official NII Shonan Meeting webpage, the seminar organizers have created their own seminar webpage for the participants, as below.

*You will be transferred to an external website by clicking the link above.

Description of the Meeting

History and summary goal

Optimal placement and movement of data between storage, memory and processing components has been the main driver for higher performance and lower energy consumption of all software. Attaining (or even approaching) optimality requires an accurate model. A flat homogeneous address space abstraction (the von Neumann model), presented by hardware and by the hardware abstraction layers of the OS/runtime, is becoming harder and harder to maintain and is less and less accurate as a model.

What new abstractions should compiler writers and programmers use instead? In the emerging landscape of new memory devices, with radically different processing elements in systems ranging from supercomputers to IoT devices, how can we save users from being overwhelmed by the diversity and complexity of memory architectures? Conversely, how can we give programmers and compiler writers some idea of the memory architecture and some degree of control over data placement and movement?

Industry trends and state of the art

A revolution in the electronics and computer industry has started to deliver hybrid, widely diverse kinds of memory, such as off-package persistent memory (e.g. Intel’s Optane Memory), many forms of volatile RAM, on-package RAM (e.g. High Bandwidth Memory), and logic-integrated on-chip persistent RAM. The kinds of memory becoming available differ by orders of magnitude in capacity, latency and throughput. Some kinds are already used as a cache generally controlled by hardware, others as part of the address space of traditional processors, while other memory is seen as a long-term storage device or may interact with processors with very different instruction sets, sometimes without load/store mechanisms (e.g. GPUs and machine learning accelerators such as Google’s Tensor Processing Units).

Limited support using hardware instructions, operating systems and low-level libraries has been introduced, for example for cache coherency control, selective cache flush mechanisms and NUMA addressability. The high-performance computing community is familiar with analysing performance and creating generally non-portable ad-hoc optimizations using fragile pragmas and qualifiers with poor diagnostics.

Compilers for mainstream high-level languages like Java, Swift, Go, Scala, Python, Ruby, JavaScript, OCaml and Haskell provide the user with the illusion of a flat address space, which fits well with the calculi and abstract machines that underlie programming language research. In practice, such languages rarely deliver good performance out of the box; they have widely varying features to optimize instruction scheduling, but much less developed memory management, and inspire many duplicated efforts between different compilers. A key disconnect between the programming languages, computer architecture, and high-performance computing communities may be a root cause.

Domain Specific Languages (DSLs) have delivered promising results, and in some cases (e.g. Halide and TensorFlow) include automatic and guided analysis and interfaces for data placement and movement. But the high-level specifications of such DSLs (including memory specifications) are too model-specific, and so are hard to extend to different domains and to generalize.

Approaching key problems

The major opportunities offered by the new technologies are difficult to exploit with present-day programming languages:

- persistent-memory transactions (as introduced by Intel) have non-compositional lock management properties;

- large core counts make cache coherency electronically challenging;

- tier management of memory and placement and movement policies are in their infancy;

- automatic portability for support of persistent and non-persistent memory semantics in libraries appears elusive, even for structures as simple as a doubly-linked list, and leveraging persistent memory for the creation of programs that resume after interruptions is currently beyond reach.

Approaches to these problems are wide open. To enable control, a discipline is needed: defining types to describe computer architectures, and providing methods to relate these to program data structures. Beyond control, we need a systematic approach to automatic optimization, possibly posed as an optimization problem accessible to automated solvers or machine learning, as is done in some DSLs and in physical micro-electronics layout design systems. The control should be at a high level, with diagnostics, e.g. for data that is already wrongly placed.
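As a purely illustrative sketch of this idea (not a proposal from the organizers), the following Python fragment describes memory tiers as typed values, treats placement as a simple optimization problem solved greedily, and reports data that is currently in the wrong tier. All names (`Tier`, `DataObject`, `place`, `misplaced`) and all capacities, latencies and access rates are hypothetical, chosen only to make the example concrete; a real system might hand the same description to a proper solver instead of a greedy heuristic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    """Hypothetical description of one memory tier."""
    name: str
    capacity: int      # abstract size units, not real hardware figures
    latency_ns: float  # illustrative value, not a measurement


@dataclass(frozen=True)
class DataObject:
    """Hypothetical program data structure with an observed access rate."""
    name: str
    size: int          # same abstract units as Tier.capacity, must be > 0
    accesses_per_sec: float


def place(objects, tiers):
    """Greedy heuristic: the hottest objects per unit of size go to the
    fastest tier that still has room. This is one simple strategy, not an
    optimal solver."""
    free = {t.name: t.capacity for t in tiers}
    fastest_first = sorted(tiers, key=lambda t: t.latency_ns)
    hottest_first = sorted(
        objects, key=lambda o: o.accesses_per_sec / o.size, reverse=True
    )
    placement = {}
    for obj in hottest_first:
        for tier in fastest_first:
            if free[tier.name] >= obj.size:
                free[tier.name] -= obj.size
                placement[obj.name] = tier.name
                break
        else:
            raise ValueError(f"no tier has room for {obj.name}")
    return placement


def misplaced(current, suggested):
    """Diagnostics: list (object, current tier, suggested tier) for every
    object whose current tier differs from the suggested one."""
    return [
        (name, current[name], tier)
        for name, tier in suggested.items()
        if current.get(name) != tier
    ]


tiers = [
    Tier("hbm", capacity=16, latency_ns=100.0),
    Tier("dram", capacity=64, latency_ns=150.0),
    Tier("pmem", capacity=512, latency_ns=400.0),
]
objects = [
    DataObject("weights", size=12, accesses_per_sec=900.0),
    DataObject("index", size=8, accesses_per_sec=400.0),
    DataObject("log", size=100, accesses_per_sec=10.0),
]

suggested = place(objects, tiers)
current = {"weights": "pmem", "index": "dram", "log": "pmem"}
for name, now, better in misplaced(current, suggested):
    print(f"{name}: currently in {now}, suggested tier is {better}")
```

The point of the sketch is the separation of concerns: the architecture description and the data description are ordinary typed values, so the placement policy can be swapped out without touching either.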

To validate the ideas that we seek to describe, numerous opportunities exist, mostly unexplored. For example, one could demonstrate that unmodified NumPy code gains improved performance when Python observes data access patterns in its garbage collector and leverages them for data placement in memory tiers. New compiler developments like MLIR may allow an implementation of the ideas in high-level languages. To demonstrate parity with the HPC community in terms of performance, the high-level language approaches we envision could be shown to match the fully understood performance model of the HPCG benchmark.

Structure of the workshop

The goal of our workshop is to contribute towards a systematic, scientific understanding of the research roadmap ahead of us in this exciting field. During the workshop, following most elements of the Quality Attribute Workshop process and some from the Architecture Tradeoff Analysis Method (ATAM) from Carnegie Mellon University’s Software Engineering Institute (SEI), we will gather requirements and focus areas in full-group facilitated discussions, come to understand alternatives through short presentations from participants, and work in breakout groups to refine key points. We do not expect to publish a book on the topic, but will collect key findings in the seminar report, which will also contain some tables and key points created during the course of the aforementioned processes.

We anticipate that the workshop participants will consist of three groups of people: specialists in memory technologies, leaders in specific domains in which sophisticated memory management is being used, and computer scientists with a solid foundation in the structure of languages and compilers.

Report

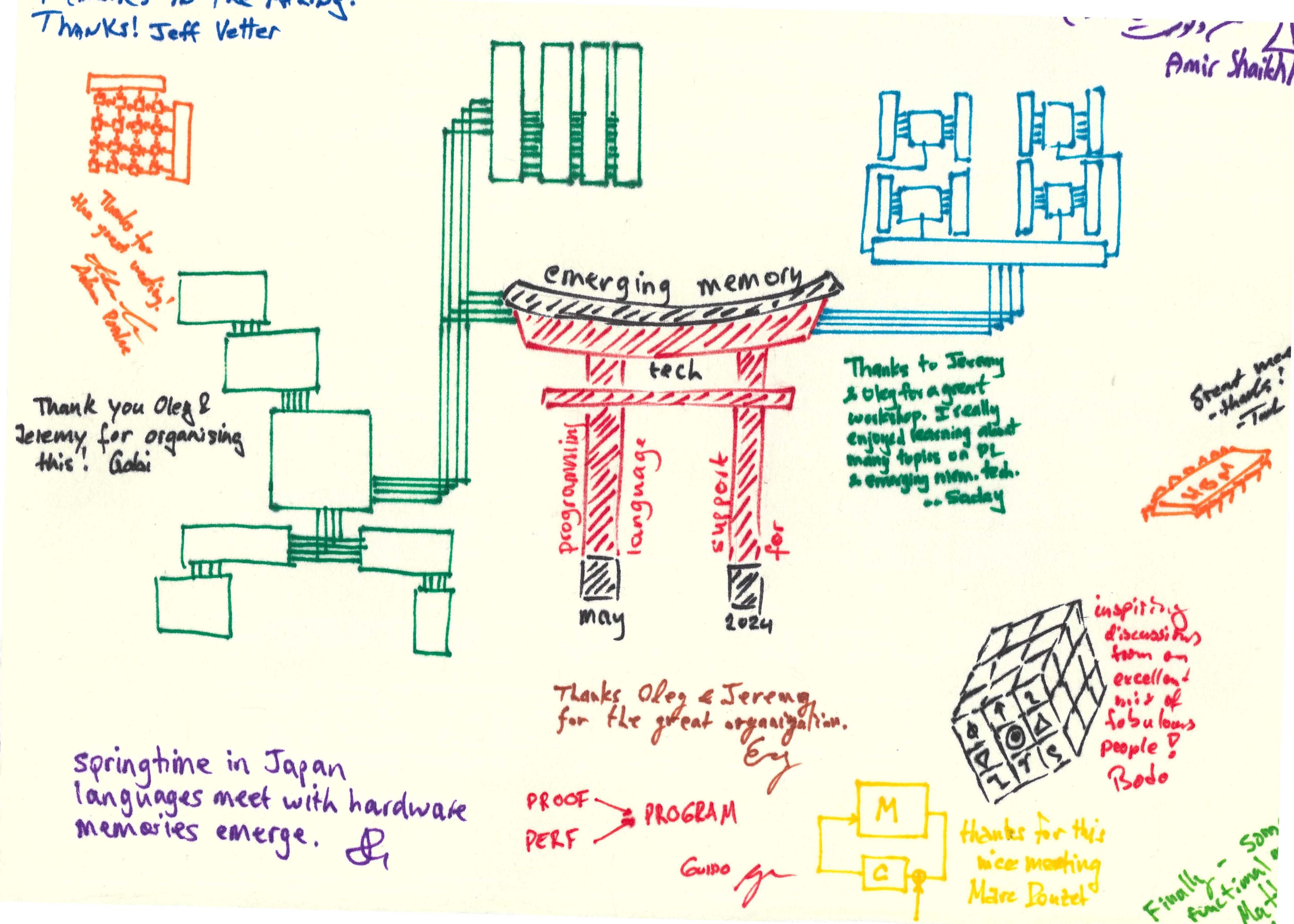

Message Board